Structured Technology Evaluation Framework for Scalable, Secure, and Production-Ready Systems – v1.0

⚠️ Disclaimer: This framework reflects my independent professional judgment and does not represent the official views of my employer or any affiliated organization. It is made publicly available for reference by peers, award organizers, competition judges, and the broader technology community.

Note: ChatGPT was used as a tool to assist with visual diagram generation and grammatical refinement. The evaluation framework, methodology, criteria, and professional judgment documented herein are entirely my own work based on my judging experience.

About the Author's Judging Expertise

As a Staff Software Engineer at Walmart Global Tech and a volunteer member of the American Association of Information Technology Professionals (AAITP), I serve as a judge and reviewer for technology competitions, awards, and peer-review settings. My evaluations span cloud-native systems, distributed microservices, fintech-grade platforms, and AI-integrated engineering solutions. Across these reviews, I focus on technical merit and real-world viability, particularly architectural soundness, scalability under load, security and reliability by design, and evidence-supported impact. The methodology presented here documents the consistent approach I use to assess submissions fairly across varying levels of maturity and presentation quality.

Purpose

Modern technology solutions, including cloud-native microservices, distributed systems, AI-integrated platforms, and fintech applications, are evaluated in many settings: industry awards, developer challenges, hackathons, open-source reviews, and technical publications. While these contexts differ, evaluators face a common problem: distinguishing compelling presentations from solutions that are technically sound, scalable, secure, reliable, and meaningful in real-world environments.

This document codifies my evaluation approach to support consistent, evidence-based technical assessment, especially when comparing submissions that vary widely in scope, team size, and documentation.

Scope

This methodology is intended for assessing software systems and technology solutions commonly encountered in professional and community evaluation settings, including distributed architectures, cloud-native applications, microservices, performance-critical platforms, and related engineering work.

Why This Approach Exists

Evaluating engineering work is genuinely difficult. Strong marketing, impressive demos, or sophisticated terminology can sometimes overshadow genuine technical quality, while understated but robust solutions may be undervalued.

This methodology exists to help separate presentation from substance by prioritizing:

- Independent assessment of technical merit

- Balance between innovation and real-world feasibility

- Explicit consideration of scalability, security, reliability, and long-term maintainability alongside novelty

By applying consistent principles, evaluations become more transparent, repeatable, and defensible.

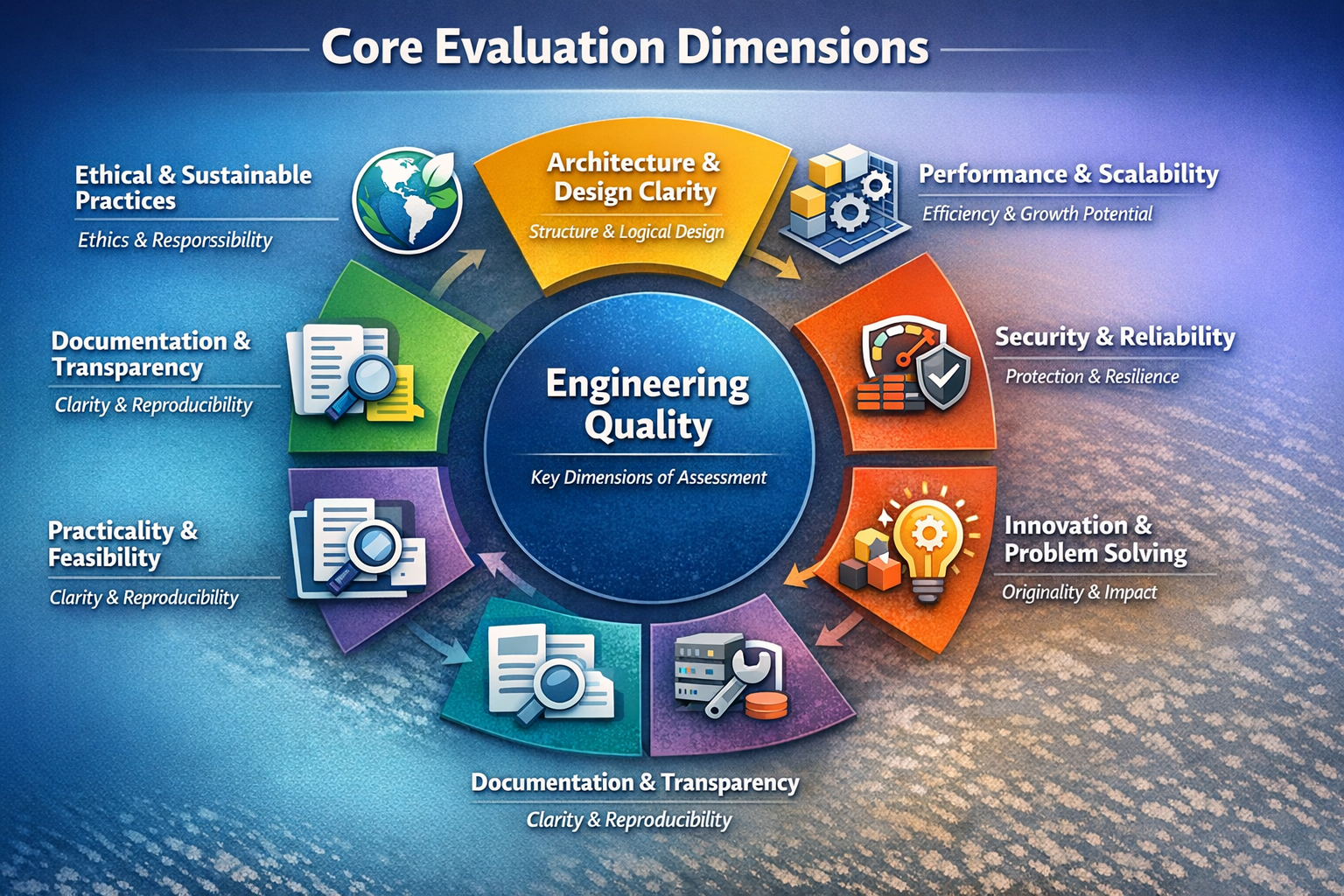

Core Evaluation Dimensions

The evaluation rests on seven fundamental dimensions of engineering quality. Each applies across domains but is weighted according to the specific review context (e.g., hackathon vs. enterprise award).

1. Architecture Soundness and Design Clarity

Assesses whether the system is thoughtfully structured, modular, and clearly explained. Key checks include logical layering, appropriate separation of concerns, justified design decisions, and reasonable complexity for maintainability and future extension.

2. Performance and Scalability

Evaluates whether the solution can handle realistic growth in load, data volume, or users. Evidence of performance testing, load-handling strategies, bottleneck mitigation, and horizontal/vertical scaling approaches is critical. Solutions that function only at small scale receive lower consideration for production readiness.

3. Security and Reliability

Examines whether security and resilience are designed in from the beginning rather than bolted on. Focus areas include data protection, authentication/authorization, threat modeling, failure handling, graceful degradation, and recovery mechanisms under stress or attack.

4. Innovation and Problem-Solving Impact

Measures the originality and practical relevance of the solution. Strong entries clearly define a meaningful problem, demonstrate measurable improvement over existing approaches, and deliver tangible technical or business value.

5. Practicality and Implementation Feasibility

Considers real-world constraints such as the appropriateness of the chosen technology stack, alignment with industry maturity levels, operational considerations (deployment, monitoring, cost), and long-term maintainability and sustainability.

6. Documentation and Transparency

Evaluates clarity of explanation, reproducibility of results (where applicable), and honest discussion of limitations, trade-offs, and assumptions. High-quality documentation enables fair assessment and supports knowledge transfer.

7. Ethical and Sustainable Engineering Practices

Reviews awareness of broader responsibilities such as data privacy, responsible AI and data usage (where relevant), fairness and inclusivity, and operational and environmental sustainability over time.

How This Approach Is Applied

This methodology is flexible and context-aware. While the seven dimensions remain constant, emphasis shifts depending on the evaluation setting:

- Industry awards & enterprise competitions: Heavy weight on scalability, security, reliability, practicality, and production readiness.

- Hackathons & developer challenges: Greater emphasis on innovation, clarity, and implementation feasibility within time constraints.

- Open-source / technical publication reviews: Strong focus on documentation, reproducibility, architectural soundness, and ethical considerations.

This adaptability ensures consistent expert judgment without imposing rigid, one-size-fits-all scoring.
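The framework deliberately avoids a fixed numeric rubric, but when a panel does require scores, the shift in emphasis can be made explicit rather than implicit. The sketch below is a hypothetical illustration, not part of the methodology itself: the dimension keys, the assumed 1 to 5 scale, and every weight are invented solely to mirror the emphases listed above.

```python
from typing import Dict, List

# The seven core dimensions, keyed by short hypothetical identifiers.
DIMENSIONS: List[str] = [
    "architecture",
    "performance_scalability",
    "security_reliability",
    "innovation_impact",
    "practicality",
    "documentation",
    "ethics_sustainability",
]

# Hypothetical relative weights per evaluation context (illustrative only).
CONTEXT_WEIGHTS: Dict[str, Dict[str, int]] = {
    "industry_award": {
        "architecture": 2, "performance_scalability": 3, "security_reliability": 3,
        "innovation_impact": 1, "practicality": 3, "documentation": 1,
        "ethics_sustainability": 1,
    },
    "hackathon": {
        "architecture": 2, "performance_scalability": 1, "security_reliability": 1,
        "innovation_impact": 3, "practicality": 3, "documentation": 2,
        "ethics_sustainability": 1,
    },
    "open_source_publication": {
        "architecture": 3, "performance_scalability": 1, "security_reliability": 1,
        "innovation_impact": 1, "practicality": 1, "documentation": 3,
        "ethics_sustainability": 2,
    },
}


def weighted_score(scores: Dict[str, float], context: str) -> float:
    """Combine per-dimension scores (assumed 1-5) into a weighted average on the same scale."""
    weights = CONTEXT_WEIGHTS[context]
    missing = [d for d in DIMENSIONS if d not in scores]
    if missing:
        raise ValueError(f"missing scores for: {', '.join(missing)}")
    total_weight = sum(weights[d] for d in DIMENSIONS)
    return sum(weights[d] * scores[d] for d in DIMENSIONS) / total_weight


# An entry that is strong on innovation but average everywhere else.
entry = {d: 3.0 for d in DIMENSIONS}
entry["innovation_impact"] = 5.0
for ctx in CONTEXT_WEIGHTS:
    print(ctx, round(weighted_score(entry, ctx), 2))
```

The same entry ranks higher in the hackathon context simply because innovation carries more weight there. Any such numbers would support, not replace, the written rationale behind a judgment.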

Context-Specific Applications

While the core seven dimensions remain consistent, their relative weighting and specific focus areas adapt to the evaluation context. The following sections provide specialized guidance for each context:

Application in Journal Peer Reviews

Context

Peer-reviewed journals play a critical role in shaping the future of academic research, industry practice, and policy decisions. As a reviewer, my responsibility is twofold: first, to recognize genuine novelty and contribution in the research, and second, to ensure methodological rigor, clarity, and reproducibility. Judging a paper is not about personal preference or style; it is about assessing whether the study is technically sound, clearly reasoned, and trustworthy. This matters because the credibility of a study directly determines the value of its contribution to the field.

How I Apply This Framework in Journal Reviews

In reviewing journal submissions, the Technology Evaluation Framework is applied to assess each manuscript systematically across its full lifecycle, from problem formulation through conclusions. This approach ensures that every paper is evaluated with clarity, consistency, and thoroughness, with attention to the core aspects that determine its technical validity and overall impact.

Clarity of Purpose and Research Question

The first step in evaluating any journal submission is understanding the research problem and the question the study seeks to answer. A strong paper begins by clearly articulating the problem it addresses, explaining why this problem is significant in the broader research context, and presenting a focused research question. This clarity sets the foundation for the rest of the study. If the research question is unclear or misaligned with the methodology, it weakens the entire paper's credibility.

Abstract, Introduction, and Literature Context

I pay close attention to the abstract and introduction. The abstract should concisely summarize the study's aims, methods, key findings, and conclusions, and it must be able to stand alone, giving readers enough information to grasp the essence of the paper without reading the entire manuscript. The introduction should offer a comprehensive overview of the current state of the field, identify gaps or unresolved questions in existing research, and logically explain why this study is needed. A precise and well-structured introduction builds a strong foundation for the paper.

Study Design and Methodological Rigor

The study design is evaluated to determine whether it is appropriate for addressing the stated research question. Methods are expected to be described in sufficient detail to allow replication, including a clear explanation of the data collection process, the tools and techniques used, and any controls or validation steps applied. Transparency in methodology is essential. When methods are insufficiently described, it raises concerns about the reliability and reproducibility of the results. Attention is also given to whether potential sources of bias are clearly acknowledged and appropriately mitigated, as such factors can significantly influence study outcomes.

For empirical studies, robust experimental design is expected. This includes appropriate sample sizes, the use of control groups where applicable, and clearly described statistical methods. The presence of validation steps and controls is critical to ensuring that reported results are meaningful, credible, and reproducible.

Data Presentation and Result Integrity

The integrity of the results is a critical aspect of any study. Evaluation focuses on how data is presented, ensuring that figures and tables are clearly labeled and consistent with the accompanying text. Results should align fully with the described methodology and provide a transparent and honest account of the findings. Clear statistical reporting—including confidence intervals and error margins where relevant—is also examined to assess the robustness and reliability of the results.

Furthermore, the consistency between the written content, data visuals, and tables is essential. If there are discrepancies or signs of selective reporting, this would raise significant concerns about the study's credibility.
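As a worked illustration of what confidence intervals and error margins can look like in a results section, the sketch below computes a 95% interval for a sample mean as mean ± z · s / √n. It assumes a normal approximation (z ≈ 1.96), which is reasonable for larger samples; for small samples a Student's t critical value is the more appropriate choice. The function name and sample values are hypothetical.

```python
import math
import statistics
from typing import Sequence, Tuple


def mean_confidence_interval(
    samples: Sequence[float], confidence: float = 0.95
) -> Tuple[float, float, float]:
    """Return (mean, lower, upper) using a normal approximation to the sampling distribution."""
    if len(samples) < 2:
        raise ValueError("need at least two samples to estimate variability")
    mean = statistics.fmean(samples)
    std_err = statistics.stdev(samples) / math.sqrt(len(samples))  # sample std dev / sqrt(n)
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)      # about 1.96 for 95%
    margin = z * std_err
    return mean, mean - margin, mean + margin


mean, lower, upper = mean_confidence_interval([10.0, 12.0, 14.0, 11.0, 13.0])
print(f"mean = {mean:.2f}, 95% CI = [{lower:.2f}, {upper:.2f}]")
```

Reporting an interval like this lets a reviewer judge whether a claimed improvement is larger than run-to-run noise, which a single point estimate cannot show.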

Interpretation and Logical Reasoning

Once the data is presented, I evaluate how logically the authors interpret the results. The conclusions should be directly supported by the data and should reflect the evidence presented. Claims should be proportionate to the findings, avoiding overstatement or unwarranted extrapolations. A strong study will also discuss its limitations and acknowledge any weaknesses in the methodology or results.

A paper that avoids discussing its limitations or fails to address unexpected findings lacks the intellectual honesty necessary for scholarly work.

Comparing with Existing Research

I always ensure that the manuscript positions its findings within the broader body of research. A solid paper clearly references and compares its results with existing literature, explaining how it advances, contradicts, or confirms prior studies. Ignoring relevant research or failing to contextualize the study's contribution is a red flag, as it suggests a lack of awareness of the field's ongoing developments.

Ethical Considerations

Finally, ethical considerations are paramount. I assess whether the authors have properly addressed ethical concerns related to the research, such as data usage, privacy risks, and participant consent. Ethical transparency is critical in maintaining trust in the research process, particularly in studies involving human subjects, sensitive data, or emerging technologies like AI and machine learning. A paper that does not adequately address ethical issues or lacks clarity about the study's limitations and assumptions cannot be considered trustworthy.

Example Application

In reviewing manuscripts for venues including ACM Transactions on Internet Technology (TOIT) and ACM CODASPY, I have applied this framework to assess whether the research problems were clearly articulated, whether experimental results were reproducible, and whether architectural or system-level claims were grounded in sound reasoning and realistic assumptions. This method ensures that every submission, regardless of its complexity or presentation style, receives a consistent, fair, and rigorous evaluation.

Value of This Approach

By applying a structured evaluation framework in journal peer review, I reduce subjective bias, ensure consistency across reviews, and encourage higher standards of clarity, rigor, and transparency. More importantly, it helps ensure that accepted research contributes meaningfully and reliably to advancing the field.

Industry Awards and Enterprise Competitions

Overview

Industry awards and enterprise competitions judge technology by how well it performs in real-world use. Judges look at the ability to handle large numbers of users, reliability, security, interoperability with other systems, and clear business benefits. Unlike academic reviews, which focus on novel ideas and research methods, industry judges want to know whether a solution is ready to deploy, works well in practice, and can deliver lasting value.

This appendix documents how I apply the core evaluation dimensions when judging industry submissions across cloud-native platforms, AI systems, cybersecurity architectures, DevOps automation, and customer-facing digital service ecosystems.

Evaluation Emphasis in Industry Settings

In industry contexts, the same seven dimensions from the core framework are applied, with emphasis placed on those most predictive of real-world viability: scalability and performance under load, security and reliability by design, operational maturity, integration readiness, and evidence-supported impact.

When submission materials include benchmarks, deployment evidence, architecture diagrams, or security documentation, I evaluate that evidence directly. When such artifacts are not included, credibility is assessed based on the quality of engineering reasoning, architectural coherence, and the clarity of operational assumptions.

This approach enables consistent evaluation across entries with different levels of maturity and varying documentation quality, while keeping the focus on technical merit rather than presentation polish.

What I Assess Most Heavily

Scalability and Performance Credibility

For cloud-native and transaction-intensive solutions, I prioritize whether the system design is consistent with high-volume operation. The evaluation considers the presence and feasibility of scaling strategies, such as handling peak load, preventing bottlenecks, and reducing cascading failures. When available, performance evidence is examined, including benchmarks, latency and throughput claims with appropriate context, or workload characterization. The assessment also considers whether performance optimization is achieved through sound architectural design rather than fragile or narrowly tuned configurations.
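Latency and throughput claims carry appropriate context when they report tail percentiles together with sample size and measurement window, not just an average. The sketch below shows one way to summarize raw latency samples using the nearest-rank percentile method; the function names, the chosen percentiles, and the synthetic data are assumptions for illustration only.

```python
import math
from typing import Dict, Sequence


def percentile(sorted_samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
    if not sorted_samples:
        raise ValueError("no samples")
    rank = max(1, math.ceil(p * len(sorted_samples) / 100))
    return sorted_samples[rank - 1]


def summarize_latency(samples_ms: Sequence[float], duration_s: float) -> Dict[str, float]:
    """Summarize a load-test run: tail latencies plus the context needed to interpret them."""
    ordered = sorted(samples_ms)
    return {
        "count": float(len(ordered)),
        "throughput_rps": len(ordered) / duration_s,
        "p50_ms": percentile(ordered, 50),
        "p95_ms": percentile(ordered, 95),
        "p99_ms": percentile(ordered, 99),
        "max_ms": ordered[-1],
    }


# Synthetic run: 100 requests over 10 seconds with latencies of 1..100 ms.
print(summarize_latency([float(ms) for ms in range(1, 101)], duration_s=10.0))
```

A claim such as "p99 of 99 ms at 10 requests per second over 100 requests" is far easier to assess than "low average latency", because both the tail and the load level are visible, and a reviewer can immediately ask whether that load is realistic for the stated use case.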

Architecture Soundness for Distributed Systems

Across microservices and distributed architectures, the evaluation focuses on well-defined boundaries, clear responsibilities, and engineering trade-offs that are explicit and defensible. Strong submissions explain how components interact, how failures are handled, and how the architecture supports long-term maintainability and evolution. Where diagrams are available, I verify that the architecture presented is consistent with the system behaviors and claims described in the submission.

Reliability and Operational Maturity

Production readiness is strongly correlated with operational thinking. This evaluation focuses on whether a submission demonstrates awareness of observability, incident response, degradation behavior, and recovery patterns. When supporting materials include runbook-style guidance, monitoring approaches, deployment practices, or reliability-focused design choices, I treat these as meaningful signals of maturity. Where such materials are not provided, the assessment considers whether the system description reflects realistic operational assumptions.
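Degradation behavior is easier to judge when a submission shows the mechanism rather than merely asserting it. One widely used pattern is the circuit breaker, which stops calling a failing dependency and serves a fallback until a cool-down period passes, limiting cascading failures. The sketch below is a minimal, single-threaded illustration; the class, thresholds, and the hypothetical `fetch_recommendations` dependency are assumptions, and the framework does not require this particular pattern.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Minimal single-threaded circuit breaker (illustrative sketch).

    After `failure_threshold` consecutive failures the breaker opens and calls
    go straight to the fallback. Once `reset_timeout` seconds have elapsed, one
    trial call is allowed through: success closes the breaker, failure re-opens it.
    """

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock  # injectable so behavior can be tested without sleeping
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, operation: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self.opened_at is not None and self.clock() - self.opened_at < self.reset_timeout:
            return fallback()  # open: degrade without touching the failing dependency
        try:
            result = operation()
        except Exception:
            self.failures += 1
            # Re-open on a failed trial call, or open once the threshold is reached.
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            return fallback()
        self.failures = 0
        self.opened_at = None  # a successful call closes the breaker
        return result


def fetch_recommendations() -> list:
    raise ConnectionError("recommendation service unavailable")  # simulated outage


breaker = CircuitBreaker(failure_threshold=2, reset_timeout=10.0)
for _ in range(3):
    # The third call is served from the fallback without hitting the dependency.
    print(breaker.call(fetch_recommendations, lambda: ["popular-items"]))
```

What matters for the evaluation is less the specific pattern than whether the team can explain what users see while a dependency is down and how the system recovers afterward.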

Security Engineering and Risk Posture

For cybersecurity categories and security-sensitive transaction systems, I assess whether security controls are integrated into the system architecture rather than treated as a separate layer. The evaluation considers identity and access control practices, secure API design, data protection strategy, and overall threat awareness. When materials such as testing summaries, security claims, or control descriptions are provided, these are reviewed for internal consistency and alignment with common industry practices. Formal audits are not assumed unless they are explicitly included in the organizer-provided evaluation materials.

Integration Quality and Customer-Facing System Impact

For digital service ecosystems and API-driven customer experience platforms, I assess reliability, throughput, integration correctness, and measurable service improvement. The evaluation considers whether the solution improves customer outcomes through architectural clarity, effective automation, and operational stability rather than superficial feature breadth. When customer impact evidence is provided, it is treated as a meaningful signal of real-world adoption and readiness.

Responsible AI Adoption in Production Environments

For AI award categories, I evaluate whether AI capabilities are integrated responsibly into production-grade systems. The evaluation focuses on whether it is clear what the AI is intended to do, how it is monitored in operation, how failure scenarios are handled, and whether risks such as bias, privacy exposure, or unsafe automation are explicitly acknowledged and mitigated in a manner appropriate to the use case. When submissions provide supporting evidence—such as monitoring strategies, model governance explanations, or operational controls—that evidence is examined directly. When such materials are not provided, the assessment relies on the credibility of the proposed approach, as reflected in the system's engineering design and the transparency of its stated assumptions.

Typical Review Flow I Follow

The review process starts by identifying the core problem the submission aims to solve, the intended audience, and whether it fits the selected category. I then examine the technical aspects, including the system architecture, scalability approach, and how reliability and security are addressed, paying attention to the trade-offs made by the team. Next, the assessment focuses on production readiness—how easily the solution can be deployed, integrated, and operated in real-world environments. This step considers both the supporting materials provided and the credibility of the deployment narrative. Finally, the evaluation consolidates the overall strengths, highlights significant risks or gaps, and explains why the submission stands out in comparison to others.

Conclusion

Industry awards reward technology that can succeed in production securely, reliably, and at scale while delivering measurable value. My evaluation approach is designed to be consistent and defensible across diverse submission formats and maturity levels, ensuring that decisions are rooted in engineering quality, operational realism, and responsible system design.

Hackathons and Developer Challenges

Overview

Hackathons and developer challenges create a distinct evaluation context. Participants typically build under extreme time constraints, with limited resources, incomplete information, and rapid iteration. In this environment, the goal of judging is not to apply production standards to early-stage prototypes. Instead, the aim is to evaluate whether a team delivered a functional proof-of-concept, demonstrated sound engineering judgment for the time available, and presented a clear path from prototype to a credible product or system.

This appendix documents how I adapt the core evaluation dimensions to judge rapid prototypes fairly, while still holding entries to meaningful technical standards.

How Hackathon Judging Differs from Industry Awards

In industry awards, "production readiness" often carries significant weight because solutions are expected to operate at scale and under sustained real-world use. Hackathon submissions, by contrast, are frequently minimal viable implementations that prioritize proving an idea quickly. For this reason, I evaluate prototypes based on feasibility, clarity, and demonstrable execution rather than completeness.

Execution quality still matters. An exciting idea that does not work reliably in the demo will not score well, whereas a modest idea that works smoothly and is clearly explained can outweigh an ambitious idea that never gets finished.

Core Evaluation Emphasis in Hackathons

Innovation and Problem Clarity (Highest Emphasis)

I assess whether the submission addresses a real problem with a creative approach, and whether the team can articulate the problem, the user need, and the "why now" behind the idea. In hackathons, novelty does not need to be academic novelty; it can be an original integration, an inventive workflow, a smart simplification, or a new way to apply a sponsor technology. What matters is that the value proposition is clear and the solution is meaningfully differentiated from generic templates.

Execution and Implementation Feasibility (Highest Emphasis)

This evaluation focuses on what was actually built within the given time constraints. A working demo is treated as a strong signal, particularly when it demonstrates real functionality rather than simulated outputs. The assessment also considers whether the technical choices are appropriate for a prototype stage and whether the solution shows a credible path toward becoming a maintainable system. The goal is to recognize teams that demonstrate sound practical engineering judgment under time pressure.

Architecture and Design Clarity (High Emphasis)

Even for prototypes, coherent design improves credibility. I assess whether the team can clearly explain how components work together, how responsibilities are separated, and why key design decisions were made. Enterprise-grade architecture is not expected; however, the evaluation looks for evidence that the solution was built with intention and thoughtful structure rather than pure improvisation.

Communication, User Experience, and Demo Quality (High Emphasis)

Hackathons are time-boxed and comparative; clarity matters. I evaluate whether the demo clearly shows what the solution does, whether the user flow is understandable, and whether the presentation communicates value to both technical and non-technical audiences. A strong submission makes it easy to understand what was built and why it matters, without relying on marketing language.

Technical Completeness Relative to Scope (Moderate Emphasis)

I assess whether the core claim of the submission is implemented versus mostly described. Given time limits, it is normal for edges to be unfinished. What differentiates strong projects is a disciplined scope that ensures the most important capability is real and demonstrable, even if secondary features are deferred.

Security and Scalability Awareness (Baseline Expectation)

Full threat models or production-grade scalability are not expected in hackathon entries. However, I look for basic engineering hygiene, such as avoiding exposed secrets, acknowledging identity and access considerations where applicable, and demonstrating awareness of how the system may need to evolve to handle greater load or real-world usage. At this stage, awareness and intent matter more than completeness.
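Avoiding exposed secrets is mostly a matter of habit, even in a prototype. A minimal sketch, assuming the prototype reads credentials from environment variables configured outside the repository (the variable name `DEMO_API_KEY` and both helper functions are hypothetical), looks like this:

```python
import os


def load_api_key(var_name: str = "DEMO_API_KEY") -> str:
    """Read a credential from the environment instead of hard-coding it in source control."""
    value = os.environ.get(var_name, "").strip()
    if not value:
        # Fail fast with a clear message rather than falling back to a literal key.
        raise RuntimeError(f"{var_name} is not set; configure it outside the repository")
    return value


def redact(secret: str, visible: int = 4) -> str:
    """Mask a secret for logs, keeping only the last few characters for identification."""
    if len(secret) <= visible:
        return "*" * len(secret)
    return "*" * (len(secret) - visible) + secret[-visible:]
```

In use, `load_api_key()` would be called once at startup, and only the output of `redact(...)` would ever reach a log line or a demo screen.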

Typical Review Flow I Follow

I follow a structured review flow that begins by understanding the problem the project is intended to solve and identifying its intended users. The evaluation checks whether the team's explanation aligns with what is demonstrated, and whether the core functionality works as described. The demo is reviewed to confirm that the implementation matches the stated design and technical approach. Clarifying questions are then considered around design choices, incomplete areas, and how the solution could evolve with more time. The final decision is based on the originality of the idea, how effectively it works, and its potential for future improvement, rather than on presentation polish alone.

What Strong Hackathon Submissions Tend to Demonstrate

From what I have seen, the best hackathon projects are not the ones with the most features. The strongest entries clearly show their main idea, have a working demo, and explain why their solution could work outside the hackathon.

Common Failure Patterns I Watch For

A frequent issue is overscoping, that is, attempting too many features and finishing none of them well. Another common pattern is a demo that relies on slides or partially mocked behavior without making that boundary explicit. Submissions can also lose credibility when the problem statement is unclear, when the implementation does not match the claims, or when basic security hygiene is ignored (for example, embedding secrets in code or exposing sensitive tokens in public repos). These issues are often preventable through disciplined scoping, honest labeling of what is real versus planned, and basic engineering care.

Conclusion

Hackathons reward creativity under pressure, but the best results combine creativity with disciplined execution. My judging approach aims to recognize teams that deliver functional proof-of-concepts, demonstrate sound technical judgment for the constraints, and present a credible path from prototype to a meaningful real-world solution.

Technical Book and Publication Reviews

Overview

Reviewing technical books and professional publications requires a different evaluation lens than reviewing software systems. A system can often be tested directly; a book must be assessed by the correctness of its reasoning, the reliability of its examples, the clarity of its teaching, and its ability to remain useful as tools and platforms evolve. In technical publishing, credibility is earned when content is not only accurate but also teaches readers how to think: how to make engineering trade-offs, avoid failure modes, and apply concepts safely in real environments.

This appendix documents how I apply the core evaluation dimensions when reviewing technical books, tutorials, guides, and educational publications.

What I Evaluate in Technical Publications

Clarity, Structure, and Reader Outcomes

I assess whether the publication has a clear target audience and a well-defined learning path. The evaluation considers whether expectations are set early through stated prerequisites, clear scope boundaries, and explicit outcomes describing what the reader should be able to do by the end. The structure of the book itself is also examined, including how chapters build on one another, whether the progression is coherent, and whether complexity increases in a controlled and teachable way. A well-structured technical book behaves like a well-designed system: modular, navigable, and internally consistent.

Technical Accuracy and Soundness

Accuracy is non-negotiable. I verify that explanations align with established engineering principles and that claims are framed responsibly. Where code examples are included, they are treated as technical assertions that should be verifiable. Attention is given to whether examples were actually executed, whether steps are complete, and whether the content avoids promoting fragile or misleading patterns that only work under ideal conditions. For security-sensitive topics, particular care is taken to ensure the material does not normalize unsafe defaults or omit critical safeguards.
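One way an author can make code examples verifiable, and one signal a reviewer can look for, is keeping the expected output inside the example and checking it automatically. In Python, the standard-library `doctest` module does this by running the interactive examples embedded in docstrings. The `moving_average` function below is a hypothetical book example, shown only to illustrate the technique.

```python
def moving_average(values: list, window: int) -> list:
    """Return the simple moving average of `values` over a fixed window.

    >>> moving_average([1, 2, 3, 4, 5], 2)
    [1.5, 2.5, 3.5, 4.5]
    >>> moving_average([1, 2], 3)
    []
    """
    if window <= 0:
        raise ValueError("window must be positive")
    return [sum(values[i:i + window]) / window for i in range(len(values) - window + 1)]


if __name__ == "__main__":
    import doctest

    # Re-runs every docstring example; any mismatch is reported as a failure.
    doctest.testmod()
```

If the printed output in a chapter drifts from what the code actually produces, a check like this fails before readers ever encounter the discrepancy.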

Practical Applicability and Production Realism

Technical publications are most valuable when they connect theory to real constraints. I evaluate whether examples reflect realistic scenarios rather than toy problems, and whether the author acknowledges operational considerations such as deployment, failure behavior, monitoring, performance trade-offs, cost awareness, and long-term maintainability. This is where many otherwise "correct" books fall short; they teach syntax without teaching engineering judgment. High-quality publications help readers understand not only what to do, but when to do it and when not to.

Design and Architecture Reasoning

For books that cover architecture, microservices, distributed systems, cloud platforms, or AI engineering, I assess whether the guidance is based on sound trade-offs rather than rigid rules. Strong writing makes those trade-offs explicit, such as latency versus consistency, simplicity versus flexibility, or autonomy versus governance, and clearly distinguishes proven patterns from common anti-patterns. The evaluation checks whether the content warns readers against blindly copying methods by explaining when and why they might fail.

Durability and Version Discipline

Because tools evolve quickly, I evaluate whether content will remain valuable beyond a short trend cycle. Publications age well when they teach principles and clearly label version-specific details. When content depends on a particular framework version, API behavior, or feature set, strong works call that out explicitly and provide guidance for adapting as versions change.
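At the level of an individual listing, version discipline can be as simple as stating the assumption in a comment and checking it at runtime, so a reader on an older release gets a clear message instead of a confusing failure. The sketch below uses dictionary insertion order as its version-specific detail, because that behavior became a language guarantee in Python 3.7; the helper name and message wording are hypothetical.

```python
import sys
from typing import Tuple

# Version-specific detail, labeled explicitly: this listing relies on dicts
# preserving insertion order, which the language guarantees from Python 3.7.
MIN_PYTHON: Tuple[int, int] = (3, 7)


def require_python(minimum: Tuple[int, int] = MIN_PYTHON) -> None:
    """Raise a descriptive error if the running interpreter is older than `minimum`."""
    if sys.version_info[:2] < minimum:
        found = f"{sys.version_info[0]}.{sys.version_info[1]}"
        raise RuntimeError(
            f"This example requires Python {minimum[0]}.{minimum[1]}+, found {found}"
        )


require_python()
pipeline = {"build": 1, "test": 2, "deploy": 3}
print(list(pipeline))  # stages come back in the order they were inserted
```

The principle carries over to any stack: the listing names the version it depends on, and the reader learns immediately when that assumption does not hold.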

Transparency, References, and Responsible Guidance

Credible technical writing shows its work. This evaluation examines whether facts are clearly distinguished from opinion and whether important claims are supported by reliable references, such as official documentation, recognized standards, primary sources, or well-accepted industry practices. In sensitive domains such as security, AI, and data engineering, I assess whether the work promotes responsible use, appropriately addresses privacy considerations when relevant, and alerts readers to misuse risks or common pitfalls.

How I Conduct a Review

I begin by evaluating the book's intent, scope, and audience alignment, including whether the table of contents presents a coherent learning path. The review then verifies technical claims and examples selectively but rigorously, focusing on representative chapters and core workflows rather than isolated snippets. When code is included, the assessment considers whether it can be reproduced with reasonable effort and whether the instructions are complete enough for a reader to succeed without guesswork. The review also checks whether the teaching is effective: whether difficult topics are explained clearly, whether examples aid learning, and whether the author explains the "why" behind each method. In the final synthesis, the publication is evaluated relative to other works in the same domain, strengths are identified, the most consequential issues (particularly those related to correctness or safety) are documented, and constructive guidance is provided that is actionable for authors and useful for readers.

What Excellent Technical Books Tend to Do Well

The best books make it clear who they are for and what they teach. They use real, working examples and explain their reasoning. They show how things work in real environments, not just in demo conditions. Instead of simply listing features, they help readers learn how to make good choices. They also explain when an approach works, when it might cause problems, and what alternatives are available. In other words, the best technical books do not only transfer knowledge; they also build engineering judgment.

Common Failure Patterns I Watch For

A frequent issue is unverified code or incomplete steps, which turns learning into troubleshooting. Another issue is outdated guidance presented as current best practice, especially when security or reliability consequences are involved. Some publications over-index on novelty or breadth, sacrificing clarity and correctness. Others present strong opinions without acknowledging trade-offs, which can mislead less experienced readers into applying patterns in the wrong context. I take these problems seriously because they can lead to real issues later, like unsafe settings, systems that don't work well, and teams using methods they don't fully understand.

Conclusion

Technical books and professional publications shape how engineers build systems at scale. A high-quality review should help readers trust what they are learning and help authors strengthen the accuracy, clarity, and long-term value of their work. My review approach prioritizes correctness, teachability, practical engineering judgment, and responsible guidance, so that published technical content remains both credible and useful over time.

Versioning and Availability

- Version: v1.0

- Publication date: December 28, 2025

- Location: Publicly available at https://sibasispadhi.com/

- Intended use: Professional judging, peer review, award evaluation, and technical assessment

This methodology will be revised periodically as technology practices, security standards, and evaluation norms evolve.

Commitment to Excellence

This approach is intended to raise the standard for evaluating technology solutions across industry, academia, and competitive settings. It applies consistent and transparent criteria so that technical merit is valued over flashy demos and superficial claims, and so that evaluations remain fair and effective. As an expert in agentic AI, cloud-native, and fintech technologies, I will continue to refine this approach through my ongoing judging work and as industry standards evolve, so that it stays relevant and useful to the broader technology community.

Conclusion

This Technology Evaluation Framework represents a systematic approach to assessing technical solutions across professional contexts. The methodology prioritizes objective technical merit, consistent evaluation criteria, and transparent judgment to support fair and rigorous technology assessments in industry, academia, and competitive environments.